Part of the Endoscopic Vision Challenge

Welcome to the Robust Endoscopic Instrument Segmentation Challenge 2019 (ROBUST-MIS), held as part of MICCAI 2019!

Challenge design

The whole challenge design, including information on the organization, data and evaluation, was brought together in this comprehensive document:

Introduction

Intraoperative tracking of laparoscopic instruments is often a prerequisite for computer- and robot-assisted interventions. Although previous challenges have targeted the task of detecting, segmenting and tracking medical instruments based on endoscopic video images, key issues remain to be addressed:

Robustness: The methods proposed still tend to fail when applied to challenging images (e.g. in the presence of blood, smoke or motion artifacts).

Generalization: Algorithms trained for a specific intervention in a specific hospital typically do not generalize.

The goal of this challenge is therefore to benchmark medical instrument detection and segmentation algorithms, with a specific emphasis on the robustness and generalization capabilities of the methods. The challenge is based on the largest annotated data set that has been (or will be) made available, comprising 10,000 annotated images extracted from a total of 30 surgical procedures of three different surgery types.

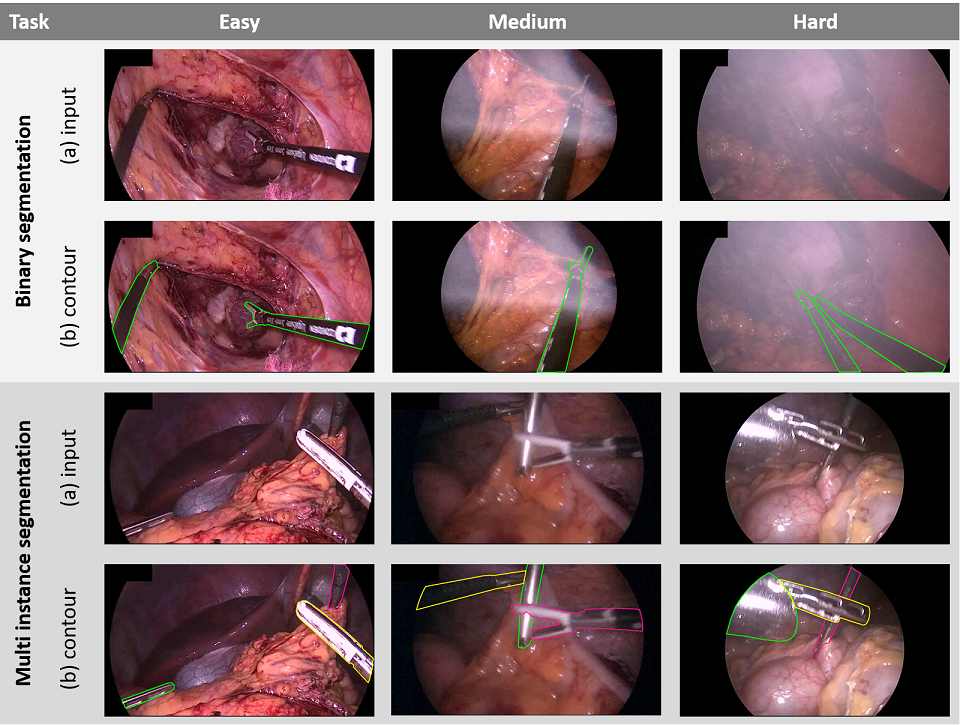

As illustrated in the figure above, the images represent various levels of difficulty for the tasks binary segmentation (two upper rows) and multiple instance segmentation (two lower rows). For both tasks, input frames (a) are provided along with the reference segmentation masks, whose contours (b) are shown in the figure.

Tasks

Participating teams may enter competitions related to three different tasks:

Task 1: Binary segmentation

- Input: 250 consecutive frames (10sec) of a laparoscopic video.

- Output: a binary image. A "1" marks a pixel belonging to a medical instrument in the last frame.

- Ranking: Based on Dice Similarity Coefficient (DSC) and Normalized Surface Dice (NSD) [1,4].
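
As an illustration of the first ranking metric, the DSC of two binary masks A and B is 2|A ∩ B| / (|A| + |B|) [1]. The following is a minimal sketch, not the official evaluation code: it assumes masks are stored as NumPy arrays of equal shape, the function name `dice_similarity` is hypothetical, and returning 1.0 for two empty masks is an assumption about a case the description above does not cover.

```python
import numpy as np


def dice_similarity(prediction: np.ndarray, reference: np.ndarray) -> float:
    """Return DSC = 2|A ∩ B| / (|A| + |B|) for two binary masks of equal shape."""
    pred = prediction.astype(bool)
    ref = reference.astype(bool)
    total = pred.sum() + ref.sum()
    if total == 0:
        # Assumption: two empty masks are treated as perfect agreement.
        return 1.0
    return float(2.0 * np.logical_and(pred, ref).sum() / total)


# Toy 3x3 example: 4 reference pixels, 4 predicted pixels, 3 in common.
reference = np.array([[0, 1, 1],
                      [0, 1, 1],
                      [0, 0, 0]])
prediction = np.array([[0, 1, 1],
                       [0, 0, 1],
                       [0, 0, 1]])
print(dice_similarity(prediction, reference))  # 2 * 3 / (4 + 4) = 0.75
```

The NSD additionally depends on a boundary tolerance and is not covered by this sketch.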

Task 2: Multiple instance detection

- Input: 250 consecutive frames (10sec) of a laparoscopic video.

- Output: an image, in which "0" indicates the absence of a medical instrument and numbers "1", "2",... represent different instances of medical instruments in the last frame.

- Output processing per frame: For each instrument instance in the reference output (represented by a specific ID > 0) and each instance in the participant’s output, the Intersection over Union (IoU) is computed. The Hungarian algorithm is then used to assign participants’ instances to reference instances, with all pairs of instances with IoU > 0.3 serving as candidates.

- UPDATE! Ranking: Based on the mean average precision (mAP) [2,3].

- Important information: Note that the instruments in the reference data represent exact instrument contours. Hence, to maximize the probability of a hit (IoU > 0.3), it is useful to represent instances with segmentation masks rather than boxes.
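
The per-frame matching described for Task 2 can be sketched as follows. This is a minimal illustration under stated assumptions, not the official evaluation code: label images are assumed to be NumPy integer arrays, the names `instance_iou` and `match_instances` are hypothetical, and SciPy's `linear_sum_assignment` (an optimal assignment solver) stands in for the Hungarian algorithm. Giving zero weight to pairs at or below the threshold is one possible way to restrict the assignment to candidate pairs.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment

IOU_THRESHOLD = 0.3  # hit threshold stated in the task description


def instance_iou(a: np.ndarray, b: np.ndarray) -> float:
    """Intersection over Union of two boolean masks."""
    union = np.logical_or(a, b).sum()
    return float(np.logical_and(a, b).sum() / union) if union else 0.0


def match_instances(prediction, reference, threshold=IOU_THRESHOLD):
    """Return {reference_id: predicted_id} for assigned pairs with IoU > threshold."""
    ref_ids = [int(i) for i in np.unique(reference) if i > 0]
    pred_ids = [int(i) for i in np.unique(prediction) if i > 0]
    if not ref_ids or not pred_ids:
        return {}
    iou = np.array([[instance_iou(reference == r, prediction == p)
                     for p in pred_ids] for r in ref_ids])
    # Assumption: non-candidate pairs (IoU <= threshold) get zero weight.
    weights = np.where(iou > threshold, iou, 0.0)
    rows, cols = linear_sum_assignment(weights, maximize=True)
    return {ref_ids[r]: pred_ids[c]
            for r, c in zip(rows, cols) if weights[r, c] > 0}


# Toy example: instance IDs differ between the two label images,
# and predicted instance 3 overlaps no reference instance.
reference = np.array([[1, 1, 0, 0],
                      [1, 1, 0, 2],
                      [0, 0, 0, 2]])
prediction = np.array([[2, 2, 0, 0],
                       [2, 0, 0, 1],
                       [3, 0, 0, 1]])
print(match_instances(prediction, reference))  # {1: 2, 2: 1}
```

In this toy frame, reference instance 1 matches predicted instance 2 (IoU 0.75) and reference instance 2 matches predicted instance 1 (IoU 1.0), while predicted instance 3 stays unmatched.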

Task 3: Multiple instance segmentation

- Input: 250 consecutive frames (10sec) of a laparoscopic video.

- Output: an image, in which "0" indicates the absence of a medical instrument and numbers "1", "2",... represent different instances of medical instruments in the last frame.

- Ranking: Based on multiple instance DSC and multiple instance NSD [4].

For all tasks, the entire video of the surgery is provided along with the training data as context information. In the test phase, only the test image along with the preceding 250 frames is provided.

Validation

Validation will be performed in three stages:

- Stage 1: The test data is taken from the procedures (patients) from which the training data were extracted.

- Stage 2: The test data is taken from the exact same type of surgery as the training data but from a procedure (patient) not included in the training data.

- Stage 3: The test data is taken from a different (but similar) type of surgery (and different patients) compared to the training data.
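
The three stages can be read as a simple rule on the surgery type and procedure of each test case. The sketch below only illustrates that rule: the function name `validation_stage`, the representation of procedures as `(surgery_type, procedure_id)` pairs and the surgery type labels are all hypothetical.

```python
def validation_stage(surgery_type, procedure_id, training_procedures):
    """Return the stage (1, 2 or 3) a test case belongs to.

    training_procedures: set of (surgery_type, procedure_id) pairs
    from which the training data were extracted.
    """
    if (surgery_type, procedure_id) in training_procedures:
        return 1  # same procedure (patient) as in the training data
    training_types = {s_type for s_type, _ in training_procedures}
    if surgery_type in training_types:
        return 2  # same surgery type, unseen procedure (patient)
    return 3      # different surgery type and different patients


# Hypothetical labels, for illustration only.
training = {("type_a", 1), ("type_a", 2), ("type_b", 1)}
print(validation_stage("type_a", 1, training))  # 1
print(validation_stage("type_a", 7, training))  # 2
print(validation_stage("type_c", 1, training))  # 3
```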

Details of the challenge design as well as annotation instructions provided to the annotators will be released shortly.

Synapse

Important

The challenge is hosted on the Synapse platform and can be found here. Please register, sign the challenge rules (available there) and get started!

References

[1] Dice, L. R. Measures of the amount of ecologic association between species. Ecology 26, 297-302 (1945).

[2] Everingham, M., Van Gool, L., Williams, C. K. I., Winn, J. & Zisserman, A. The Pascal Visual Object Classes (VOC) Challenge. International Journal of Computer Vision 88, 303-338 (2010).

[3] Hui, J. mAP (mean Average Precision) for Object Detection (2018). https://medium.com/@jonathan_hui/map-mean-average-precision-for-object-detection-45c121a31173

[4] See http://medicaldecathlon.com/ (Assessment Criteria)